TRAINING AND PREPARING DATA SET

Large language models such as OpenAI’s GPT series have changed the way we process natural language. They can produce human-like responses to a wide range of questions, which makes them very useful for businesses.

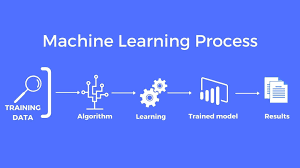

Building AI models can be a lengthy and resource-intensive task, particularly when working with large amounts of data. OpenAI’s API takes on part of that burden: fine-tuning runs on OpenAI’s hosted infrastructure, so you do not manage GPUs yourself, but preparing and managing the dataset remains your job. This post examines some best practices for building AI models with OpenAI’s API, covering how to handle large datasets and how to fine-tune pre-existing models.

Large datasets are one of the harder parts of training AI models. If the dataset is too big to fit into memory, you have to load and process it in pieces during training. Common techniques for doing this, and for getting more out of the data you have, include:

- Data augmentation: Data augmentation creates additional training examples by applying random changes to existing data points. It increases the variety of your training data and can reduce overfitting.

- Data shuffling: Data shuffling randomly changes the order of your training data before every epoch (one full pass over the data). This keeps the model from picking up patterns that come only from the order of the examples and can improve its generalization performance.

- Batch loading: Batch loading reads small batches of data into memory during training instead of loading the entire dataset at once. This saves memory and often improves training performance.
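The three techniques above can be combined in a few lines of plain Python. The following is a minimal sketch, not part of OpenAI’s API: the function names (`augment`, `shuffled`, `batches`) are hypothetical, the augmentation (dropping one random word) is a deliberately simple stand-in, and the shuffle uses a fixed-size buffer so that the full dataset never has to sit in memory.

```python
import random
from itertools import islice
from typing import Iterable, Iterator, List


def augment(text: str, rng: random.Random) -> str:
    """Toy augmentation: drop one randomly chosen word (hypothetical helper)."""
    words = text.split()
    if len(words) < 3:
        return text  # too short to alter without losing the meaning
    del words[rng.randrange(len(words))]
    return " ".join(words)


def shuffled(items: Iterable[str], buffer_size: int, rng: random.Random) -> Iterator[str]:
    """Approximately shuffle a stream using a buffer of at most buffer_size items."""
    buffer: List[str] = []
    for item in items:
        buffer.append(item)
        if len(buffer) >= buffer_size:
            # Swap a random element to the end and emit it.
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # Emit whatever is left once the stream is exhausted.
    rng.shuffle(buffer)
    yield from buffer


def batches(items: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group a stream into lists of at most batch_size items, one batch at a time."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


if __name__ == "__main__":
    rng = random.Random(42)
    # In practice `examples` could be a generator over the lines of a large file.
    examples = (f"customer question number {i}" for i in range(10))
    for batch in batches(shuffled(examples, buffer_size=4, rng=rng), batch_size=3):
        print([augment(text, rng) for text in batch])
```

Because `shuffled` and `batches` both consume iterators, the input can be a file object or generator, and only one buffer plus one batch is held in memory at any time. A larger `buffer_size` gives a more thorough shuffle at the cost of more memory.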

What is OpenAI?

OpenAI is an artificial intelligence research and deployment company. It aims to build advanced AI models and technologies that help people and tackle difficult problems. OpenAI provides tools, APIs, and services that help researchers and developers build applications and systems that use AI. If you want to train a model on your own data, these tools and APIs are a good place to start.

Follow this step-by-step guide to learn how to use OpenAI to train a model on your dataset as accurately as possible.

Step 1: Identifying the problem you want to solve

The first step is to identify the problem you want to solve. This may range from natural language processing to image recognition to predictive analytics. For instance, if you are building a chatbot that helps users with technical problems in a software product, your objective is a chatbot that understands user inquiries and offers useful solutions.

Step 2: Preparing your dataset

Once you know the problem you want to solve, the next step is to prepare the dataset. This includes removing unnecessary data, such as outliers or irrelevant points, and formatting the data so that OpenAI’s models can use it.

Example: In the chatbot project, you need to collect a dataset of customer inquiries and responses from different sources. Clean the data to remove details that do not matter for training, such as timestamps or customer identifiers, and then organize it in a format that can be used for training.
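The cleaning step for the chatbot example might look like the sketch below. The raw field names (`timestamp`, `customer_id`, `question`, `answer`) are assumptions made for illustration. The output uses one JSON object per line with a `messages` list of `role`/`content` pairs, which is the chat-style layout described in OpenAI’s fine-tuning guide at the time of writing; check the current documentation before uploading anything.

```python
import json
from typing import Dict, Iterable, Optional


def clean_record(raw: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Keep only the question and answer; drop timestamps, IDs, and empty rows."""
    question = (raw.get("question") or "").strip()
    answer = (raw.get("answer") or "").strip()
    if not question or not answer:
        return None  # an incomplete pair is not useful for training
    return {"question": question, "answer": answer}


def to_jsonl(records: Iterable[Dict[str, str]]) -> str:
    """Convert raw support records into JSONL, one training example per line."""
    lines = []
    for raw in records:
        cleaned = clean_record(raw)
        if cleaned is None:
            continue
        example = {
            "messages": [
                {"role": "user", "content": cleaned["question"]},
                {"role": "assistant", "content": cleaned["answer"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)


if __name__ == "__main__":
    raw_records = [
        {
            "timestamp": "2023-04-01T10:15:00",
            "customer_id": "C-1001",
            "question": "  The app crashes on startup. ",
            "answer": "Please update to the latest version and restart.",
        },
        {"timestamp": "2023-04-01T10:20:00", "customer_id": "C-1002", "question": "", "answer": ""},
    ]
    print(to_jsonl(raw_records))
```

Only the fields named in `clean_record` ever reach the output, so any other column in the raw data is dropped by construction rather than by an explicit deny-list.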

Step 3: Training your model using OpenAI

Once your data is ready, you can start training a model on it using OpenAI’s technology. OpenAI has many tools and APIs that can be used for various types of data and problems.

For instance, you could use OpenAI’s GPT-3 language model to process natural language or its DALL-E API to create images. These tools offer powerful pre-built models, and some of them can be fine-tuned for your specific needs.

To train the model, you submit your data to OpenAI and set the parameters for the training process. These can include the number of epochs (how many times training passes over the data), the learning rate, and the model’s size. During training, keep track of the model’s accuracy and adjust the parameters if necessary. OpenAI provides tools for evaluating your model’s performance and finding areas for improvement.
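To make these parameters concrete, here is a small sketch of how you might keep them together and decide when further training is no longer helping. Everything in it is hypothetical: `TrainingConfig` and `should_stop` are illustrative names, not OpenAI API objects, and the default values are placeholders rather than recommendations.

```python
from dataclasses import asdict, dataclass
from typing import Sequence


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container for the training parameters named in the text."""
    model_size: str = "small"
    epochs: int = 3
    learning_rate: float = 1e-4

    def __post_init__(self) -> None:
        if self.epochs < 1:
            raise ValueError("epochs must be at least 1")
        if not 0 < self.learning_rate < 1:
            raise ValueError("learning_rate must be between 0 and 1")


def should_stop(accuracy_history: Sequence[float], patience: int = 2) -> bool:
    """Return True when the last `patience` epochs did not beat the earlier best."""
    if len(accuracy_history) <= patience:
        return False  # not enough history to judge yet
    best_before = max(accuracy_history[:-patience])
    recent_best = max(accuracy_history[-patience:])
    return recent_best <= best_before


if __name__ == "__main__":
    config = TrainingConfig(model_size="small", epochs=4, learning_rate=5e-5)
    print(asdict(config))
    # Validation accuracy after each epoch (made-up numbers for illustration).
    print(should_stop([0.61, 0.70, 0.70, 0.69]))
```

Validating the values when the config is created surfaces mistakes such as a zero epoch count before any training time is spent, and the stopping check is one simple way to turn "keep track of accuracy" into a rule you can act on.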

Step 4: Testing and refining your model

After you have finished training your model, you must test it on real-world data to check that it works well. Based on the test results, you may need to improve the model further.

Example: In the chatbot project, you can examine your model’s performance by giving it a range of customer inquiries and evaluating its responses. If the performance is not satisfactory, you may need to modify the training parameters or train the model again with more data.
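One simple way to measure responses is to keep a small set of test inquiries, each paired with keywords a good answer should contain, and report the fraction of cases that pass. The sketch below assumes the model is wrapped in an ordinary Python callable; `evaluate` and `fake_model` are hypothetical names, and keyword matching is only a rough proxy for answer quality.

```python
from typing import Callable, List, Sequence, Tuple

TestCase = Tuple[str, List[str]]  # (inquiry, keywords expected in the response)


def evaluate(model: Callable[[str], str], cases: Sequence[TestCase]) -> float:
    """Return the fraction of cases whose response contains all expected keywords."""
    if not cases:
        raise ValueError("at least one test case is required")
    passed = 0
    for inquiry, keywords in cases:
        response = model(inquiry).lower()
        if all(keyword.lower() in response for keyword in keywords):
            passed += 1
    return passed / len(cases)


def fake_model(inquiry: str) -> str:
    """Stand-in for the trained model so the sketch runs without network access."""
    if "password" in inquiry.lower():
        return "Open Settings and choose Reset Password."
    return "I am not sure."


if __name__ == "__main__":
    cases = [
        ("How do I change my password?", ["settings", "reset"]),
        ("Why does the app crash on startup?", ["update"]),
    ]
    print(f"pass rate: {evaluate(fake_model, cases):.2f}")
```

Running the same test set after each round of retraining gives you a consistent number to compare, which makes it easier to tell whether a parameter change actually helped.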

Step 5: Using the trained model to solve real-world problems

After you have trained and refined your model, you can start using it to solve practical problems. OpenAI’s models can be incorporated into various applications and platforms, so you can use your trained model to make predictions, automate tasks, and more.

Example: In the chatbot project, you could integrate your refined model into the chatbot app that customers use to resolve technical problems. The chatbot would rely on the trained model to understand customer inquiries and deliver useful answers.
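When wiring the model into an application, it helps to put a thin layer between the app and the model so that empty input or a failed model call does not reach the customer as an error. The following sketch assumes, as before, that the model is available as a plain Python callable; `answer_inquiry` and the message texts are hypothetical.

```python
from typing import Callable

EMPTY_PROMPT = "Please describe the problem you are seeing."
FALLBACK = "Sorry, I could not find an answer. A support agent will follow up."


def answer_inquiry(model: Callable[[str], str], inquiry: str) -> str:
    """Return the model's reply, or a safe message if the input or the call fails."""
    inquiry = inquiry.strip()
    if not inquiry:
        return EMPTY_PROMPT
    try:
        reply = model(inquiry).strip()
    except Exception:
        # Any failure in the model call is hidden from the customer.
        return FALLBACK
    return reply or FALLBACK


if __name__ == "__main__":
    def demo_model(text: str) -> str:
        return "Try restarting the application."

    print(answer_inquiry(demo_model, "The app froze."))
    print(answer_inquiry(demo_model, "   "))
```

Passing the model in as an argument keeps the app code independent of how the model is hosted, so the same function works with a test stub during development and with the real model in production.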

Conclusion

Getting accurate results from a trained model takes careful planning, suitable algorithms, and ongoing monitoring and adjustment. With OpenAI’s technology and tools, training an accurate model on your own dataset has become more accessible than ever before. Whether you are working on an image recognition application, a natural language processing project, or a predictive analytics tool, OpenAI offers capabilities that can help you meet the project’s objectives. If accuracy is your priority, OpenAI is a strong option.

Important Affiliate Disclosure

We at culturedlink.com are an affiliate for some of these products. If you click any of these product links and buy a subscription, we earn a commission, and you do not pay more as a result. The information provided here is well-researched and dependable.