EXPLORING THE ROLE OF INSTRUCTGPT IN ENABLING CHATGPT’S ADVANCED NATURAL LANGUAGE PROCESSING ABILITIES

Generative Pre-trained Transformer (GPT) is a family of AI language models developed by OpenAI that can perform tasks like answering questions, translating languages, holding chatbot conversations, and generating computer code.

Successive GPT versions differ in the architecture of the neural network, in its size, and in the amount of text used to train it. GPT-1, launched in 2018, had about 117 million parameters, while GPT-2 had about 1.5 billion. GPT-3 has 175 billion parameters, more than 100 times as many as GPT-2, making it a breakthrough in the field of “large language model” AI systems.

What is InstructGPT?

InstructGPT is a version of the GPT-3 language model that OpenAI fine-tuned to follow instructions. It learns from human feedback to improve the accuracy of its output. This is achieved through reinforcement learning, in which the feedback is used to adjust and refine the model.

From GPT-3 to InstructGPT

GPT-3 is an important milestone in the field of artificial intelligence. It is built on an architecture called the transformer, introduced by Google researchers in 2017, which enables machine-learning models to become general-purpose engines. The transformer architecture, also known as the transformer neural network or transformer model, is designed to handle sequence-to-sequence tasks while effectively managing long-range dependencies.

OpenAI has found several other components that can be added to large language models to enhance their effectiveness. In particular, it found that combining in-context learning through prompting with human feedback is an effective way to improve the GPT model. As a result, InstructGPT was created.

InstructGPT is the technology behind ChatGPT, which OpenAI describes as a sibling model trained with similar methods. Its main difference from GPT-3 is that it uses human feedback during fine-tuning, where humans provide a set of desired outputs to the model. In the InstructGPT framework, humans work with a comparatively small dataset and interact with the model in four ways. First, they write the desired output. Second, they compare it with the output generated by the model. Third, they label the model’s output based on that comparison. Finally, the feedback is used to guide the model toward the desired outcomes for specific tasks and types of questions.

This use of human feedback is how InstructGPT differs from the original GPT model, and it has become a standard technology within OpenAI.

Understanding InstructGPT

InstructGPT is a version of the GPT-3 language model that OpenAI developed in response to users’ feedback. It addresses several issues identified in the original model:

- InstructGPT is better than GPT-3 at comprehending and executing English instructions.

- InstructGPT is designed to produce more accurate and reliable information, reducing the spread of false or misleading information.

- InstructGPT is less prone than GPT-3 to generating harmful or offensive content.

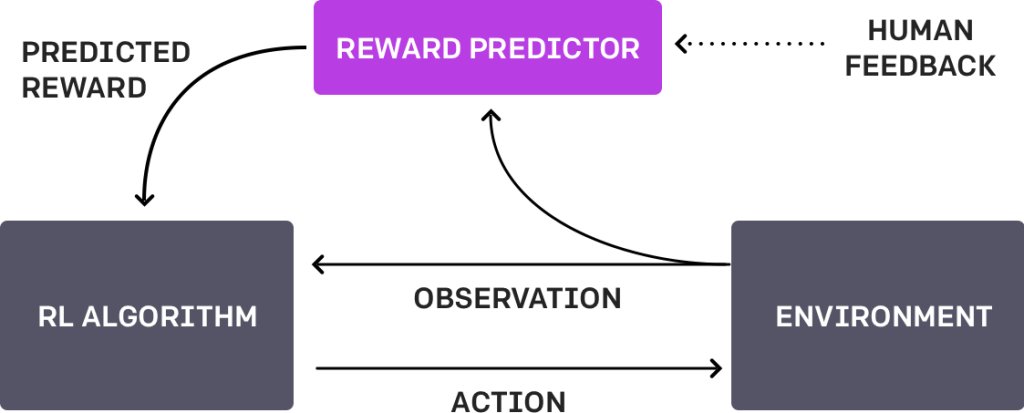

GPT-3 was trained to predict the next word from a large collection of text, not to safely perform the specific tasks that a user wanted. OpenAI addressed this problem by using reinforcement learning from human feedback (RLHF).

In reinforcement learning, an AI agent learns to make decisions by acting in an environment and receiving rewards or punishments. In 2017, OpenAI explained why learning from human feedback was important for creating safe AI systems. It also turned out to be useful for making AI systems better at certain tasks.
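The act-and-receive-reward loop described above can be illustrated with a minimal sketch. This is not OpenAI's setup: the two-action environment, its payoff probabilities, and the `run_bandit` function are hypothetical, chosen only to show an agent improving its decisions from rewards.

```python
import random

def run_bandit(steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent in a toy two-action environment (illustrative only)."""
    rng = random.Random(seed)
    reward_prob = [0.2, 0.8]  # hypothetical: action 1 is rewarded more often
    value = [0.0, 0.0]        # agent's running estimate of each action's reward
    count = [0, 0]            # how often each action was taken
    for _ in range(steps):
        # Explore at random occasionally, otherwise take the best-looking action.
        if rng.random() < epsilon:
            action = rng.randrange(2)
        else:
            action = max(range(2), key=lambda a: value[a])
        # The environment answers with a reward of 1 or 0.
        reward = 1.0 if rng.random() < reward_prob[action] else 0.0
        count[action] += 1
        # Incremental mean: move the estimate toward the observed reward.
        value[action] += (reward - value[action]) / count[action]
    return value, count

value, count = run_bandit()
print("estimated values:", value)
print("times each action was chosen:", count)
```

Over time the agent's estimates should favor the better-paying action, so it chooses that action most often. RLHF follows the same pattern, except that the reward signal comes from human judgments rather than a fixed environment.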

The OpenAI team did not invent reinforcement learning from human feedback; academics had already worked on the idea. However, the team improved the approach considerably. They taught an algorithm to do a backflip using about 900 pieces of feedback from a human evaluator. Even though this might seem like a minor accomplishment on an easy task, it was the start of something significant that eventually led to ChatGPT.

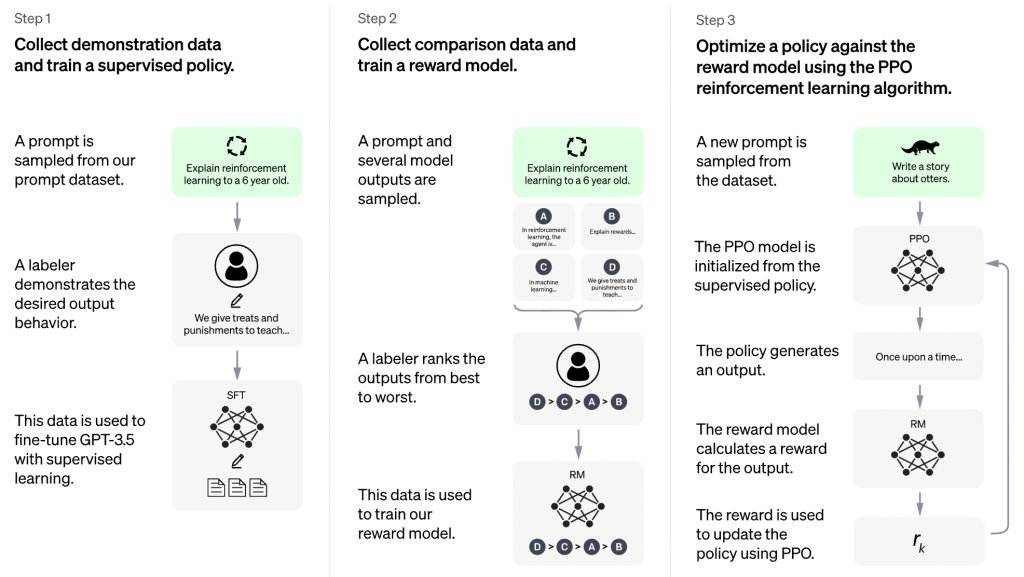

There Are Three Steps In InstructGPT Training

The RLHF process is a 3-step feedback loop involving the person, reinforcement learning, and the model’s comprehension of the goal. Each step is explained in turn.

Step 1 of the process requires collecting human-written examples and training a supervised policy.

After a prompt is selected from a dataset, a person demonstrates the desired output behavior. GPT-3 users can submit the prompts, and OpenAI researchers guide the people writing demonstrations through written instructions, informal discussion, and feedback on specific examples when required. These demonstrations are then used to fine-tune GPT-3 with supervised learning, which produces the baseline models.
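As a rough illustration of what supervised training on demonstrations optimizes, the sketch below computes the average negative log-likelihood a model assigns to the tokens of a human-written demonstration. The function name and the probability values are hypothetical; a real system would obtain these probabilities from the language model itself.

```python
import math

def demonstration_loss(token_probs):
    """Average negative log-likelihood of the demonstrated tokens.

    token_probs: probability the model assigns to each token of the
    human-written demonstration (hypothetical values in this sketch).
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

# Hypothetical probabilities before and after supervised fine-tuning.
before = demonstration_loss([0.10, 0.20, 0.05])
after = demonstration_loss([0.60, 0.70, 0.50])
print(f"loss before: {before:.3f}, loss after: {after:.3f}")
```

Supervised fine-tuning lowers this loss, which means the model assigns higher probability to the outputs that people demonstrated.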

Step 2 involves collecting comparison data and training the reward model.

A dataset is created in which humans rank several outputs sampled from the model for the same prompt, from best to worst. The reward model (RM) is then trained on this data to predict which output the human labelers prefer.
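One common way to train a reward model from comparisons is a pairwise loss, -log(sigmoid(r_preferred - r_rejected)), which pushes the preferred output's score above the rejected one's. The sketch below applies it to a tiny linear model. It is an assumption-laden toy: the two hand-made features and all function names are hypothetical, and a real reward model is a neural network that reads the prompt and the output text.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    """Scalar score of one output under a linear reward model."""
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(comparisons, lr=0.5, epochs=200):
    """comparisons: list of (preferred_features, rejected_features) pairs."""
    dim = len(comparisons[0][0])
    weights = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Gradient descent on -log(sigmoid(margin)): the step shrinks
            # as the model becomes confident in the human's ordering.
            step = lr * (1.0 - sigmoid(margin))
            for i in range(dim):
                weights[i] += step * (preferred[i] - rejected[i])
    return weights

# Hypothetical features per output: [helpfulness, toxicity]
comparisons = [
    ([0.9, 0.1], [0.4, 0.2]),
    ([0.7, 0.0], [0.8, 0.9]),
    ([0.6, 0.1], [0.2, 0.1]),
]
weights = train_reward_model(comparisons)
print("learned weights:", weights)
```

After training on these toy comparisons, the model should score each preferred output above its rejected counterpart, rewarding helpfulness and penalizing toxicity.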

In step 3, the reward model serves as the reward function for fine-tuning the supervised policy with reinforcement learning.

A new prompt is selected from the dataset, and the policy generates an output. The reward model scores that output, and the policy is updated to maximize this reward using OpenAI’s Proximal Policy Optimization (PPO) algorithm. This process improves InstructGPT’s ability to follow instructions.
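The core of PPO is a clipped objective that limits how far a single update can move the policy. The sketch below shows that objective for one sample. The function name and the example numbers are illustrative assumptions, and a full training loop (advantage estimation, batching, gradient steps) is left out.

```python
def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO clipped surrogate objective for one sample (to be maximized).

    ratio: new-policy probability of the action divided by the
           old-policy probability of the same action.
    advantage: how much better the action was than expected,
               derived here from the reward model's score.
    """
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Taking the minimum removes any incentive to push the ratio
    # outside the range [1 - clip_eps, 1 + clip_eps].
    return min(ratio * advantage, clipped_ratio * advantage)

# A good action whose probability already rose by 50%: the gain is capped.
print(ppo_clipped_objective(ratio=1.5, advantage=1.0))
# A bad action whose probability already fell by 50%: no extra credit.
print(ppo_clipped_objective(ratio=0.5, advantage=-1.0))
# A small change inside the clipping range passes through unchanged.
print(ppo_clipped_objective(ratio=1.1, advantage=2.0))
```

Clipping keeps each update conservative, which matters when the reward comes from a learned reward model that can be wrong far from the data it was trained on.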

InstructGPT vs. GPT-3

Although InstructGPT has far fewer parameters than GPT-3, OpenAI’s labelers prefer its outputs: those of the 1.3-billion-parameter InstructGPT model are preferred over those of the 175-billion-parameter GPT-3. OpenAI also stated that InstructGPT’s performance on academic natural language processing evaluations is largely preserved, showing that its capabilities remain intact.

The InstructGPT models were in a testing phase on the API for more than a year, and they have since become the default language models there. OpenAI believes that involving human input to improve the models is a powerful way to make them safer and more reliable.

Conclusion

InstructGPT is a language model created by OpenAI as a successor to GPT-3. People were unhappy with the harmful results GPT-3 could produce, so OpenAI developed InstructGPT to address this issue using a technique called reinforcement learning from human feedback (RLHF). This involves a 3-step process in which a human, the model, and reinforcement learning work together to achieve a goal.

InstructGPT is the model OpenAI’s labelers prefer, even though its 1.3-billion-parameter version has more than 100 times fewer parameters than the 175-billion-parameter GPT-3. Despite its smaller size, InstructGPT’s outputs are rated better.

Important Affiliate Disclosure

We at culturedlink.com are esteemed as a major affiliate for some of these products. Therefore, if you click any of these product links to buy a subscription, we earn a commission. However, you do not pay a higher amount for this. The information provided here is well-researched and dependable.